As a Data Service provider in the UK, we are constantly looking for solutions to the difficulties we encounter every day. Working on real-world challenges has exposed us to problems that are costly in both time and computing resources. On many occasions, traditional machine learning and deep learning algorithms have failed to run at all, and our computers have crashed.

During the lockdown, we discovered a great new science fiction series called Devs on Hulu. Devs explores quantum computing and the kind of scientific research that is currently underway around the world. It got us thinking about Quantum Theory, Quantum Computing, and how Quantum Computers might be used to predict the future.

After some more research, we came across Quantum Machine Learning (QML), a term we were unfamiliar with at the time. The area is both intriguing and practical: it has the potential to help tackle problems that are expensive in computation and time, such as the ones we have experienced. As a result, we chose QML as a study topic and want to share our findings with everyone.

What is Quantum Computing?

Quantum computing uses properties of quantum physics, such as superposition and entanglement, to solve computational problems.

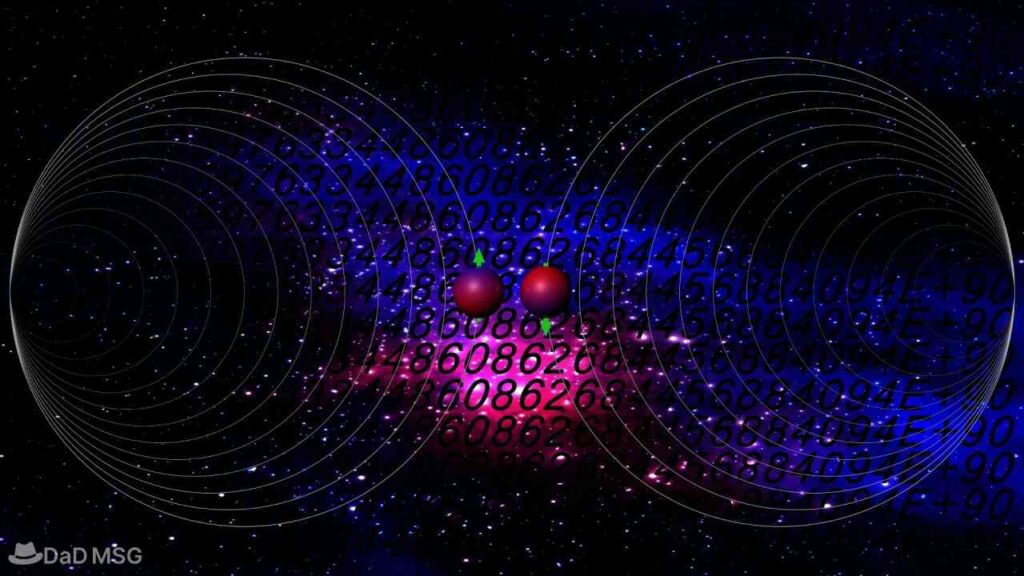

Traditional computers use 'bits', which can have a value of either 1 or 0, and determine answers by manipulating sequences of these 1s and 0s. The quantum computing equivalent of a bit is a quantum bit (qubit). Like a bit, a qubit gives a value of 1 or 0 when it is measured. Before it is measured, however, quantum behaviour allows it to be in a superposition of both values at the same time.

(Tossing a coin is a handy analogy when thinking about qubits. Once it lands on the table, it shows either heads or tails, but while it is spinning it can be thought of as both at once.)
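To make the coin analogy concrete, here is a minimal classical simulation in Python. It assumes a single qubit described by two amplitudes, `alpha` and `beta`; these names are chosen here for illustration. Measuring the qubit returns 0 with probability |alpha|² and 1 with probability |beta|². An equal superposition therefore behaves like a fair spinning coin.

```python
import math
import random

def measure(alpha: complex, beta: complex, shots: int, seed: int = 0) -> dict:
    """Simulate repeated measurements of the qubit state alpha|0> + beta|1>."""
    p0 = abs(alpha) ** 2
    p1 = abs(beta) ** 2
    # A valid qubit state is normalised: the two probabilities sum to 1.
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("state is not normalised")
    rng = random.Random(seed)
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        # Each measurement collapses the superposition to a single classical bit.
        outcome = 0 if rng.random() < p0 else 1
        counts[outcome] += 1
    return counts

# Equal superposition: the "spinning coin".
amp = 1 / math.sqrt(2)
counts = measure(amp, amp, shots=10_000)
print(counts)  # each outcome appears roughly half the time
```

A qubit that is definitely in |0⟩ (`measure(1, 0, ...)`) behaves like a coin lying heads-up: every measurement returns 0.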

Because a quantum computer's qubits can be in a superposition of 0 and 1, a register of qubits can represent many possible inputs at once. However, measuring the register returns only one outcome, and that outcome could be either a right or a wrong answer. Quantum algorithms therefore use interference to cancel out the incorrect responses and reinforce the correct one, so that a measurement is likely to return it. For certain problems, this lets a quantum computer reach the answer in far fewer steps than a classical computer that evaluates the possibilities one after another.
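Interference is how quantum algorithms get rid of incorrect responses. The sketch below is a plain-Python simulation, not a real quantum algorithm. It applies the Hadamard gate H to |0⟩ once, which gives an equal superposition, and then applies it a second time. The two paths leading to |1⟩ carry opposite signs and cancel, so the qubit returns to |0⟩ with certainty.

```python
import math

# Hadamard gate as a 2x2 matrix acting on amplitude vectors [a0, a1].
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply(gate, state):
    """Multiply a 2x2 gate by a 2-element amplitude vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

zero = [1.0, 0.0]
once = apply(H, zero)   # equal superposition: both amplitudes about 0.707
twice = apply(H, once)  # the |1> contributions cancel (destructive interference)

probs = [abs(a) ** 2 for a in twice]
print(probs)
```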

A quantum computer's 'power' is often described by 2^N, where N is the number of qubits, because N qubits can hold a superposition over 2^N states. For example, a quantum computer with 50 qubits could represent over one thousand trillion (2^50, about 1.1 × 10^15) values at once. A 300-qubit computer could represent more values than there are atoms in the universe! This state space grows exponentially with every qubit added, which makes quantum computers appealing for increasingly complex computing tasks involving large volumes of data.
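The figures above follow directly from 2^N. The quick check below uses the commonly quoted estimate of about 10^80 atoms in the observable universe. That figure is approximate and does not come from this article.

```python
def state_space_size(n_qubits: int) -> int:
    """Number of basis states an N-qubit register can be in superposition over."""
    return 2 ** n_qubits

print(f"{state_space_size(50):,}")     # 1,125,899,906,842,624
ATOMS_IN_UNIVERSE = 10 ** 80           # rough, commonly quoted estimate
print(state_space_size(300) > ATOMS_IN_UNIVERSE)  # True
```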

What are its applications in machine learning?

-

Linear Algebraic Problems Solved by Quantum Machine Learning

Many Data Analysis and Machine Learning problems are solved with matrix operations on vectors in a high-dimensional vector space.

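In quantum computing, the state of a register of a qubits is a vector in a 2^a-dimensional complex vector space. Quantum operations act on that vector as matrices, so matrix manipulation is at the heart of the field. Quantum algorithms can handle typical linear algebra problems over these 2^a-dimensional spaces in time polynomial in a. Such problems include the Fourier transform, finding eigenvectors and eigenvalues, and solving systems of linear equations. This can be exponentially faster than the best known classical methods, which is the so-called quantum speedup. These speedups usually depend on assumptions. For example, the input must be loadable efficiently into a quantum state, and for linear systems the matrix must be sparse and well conditioned. The Harrow, Hassidim, and Lloyd (HHL) algorithm for solving linear systems of equations is one example.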
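HHL solves A x = b, but it does not return the vector x. Instead, it prepares a quantum state whose amplitudes are proportional to x, that is x / ||x||. The classical sketch below uses a 2x2 system chosen for illustration and computes that target state, so it is clear what the quantum algorithm outputs. It does not implement HHL itself.

```python
import math

def solve_2x2(A, b):
    """Solve A x = b for a 2x2 matrix using Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    if det == 0:
        raise ValueError("matrix is singular")
    x0 = (b[0] * A[1][1] - A[0][1] * b[1]) / det
    x1 = (A[0][0] * b[1] - b[0] * A[1][0]) / det
    return [x0, x1]

def as_quantum_state(x):
    """Normalise x so its squared entries sum to 1, as qubit amplitudes must."""
    norm = math.sqrt(sum(v * v for v in x))
    return [v / norm for v in x]

# Hermitian (here real symmetric) matrix, as HHL requires.
A = [[3.0, 1.0],
     [1.0, 2.0]]
b = [1.0, 0.0]

x = solve_2x2(A, b)
state = as_quantum_state(x)
print(x, state)
```

Reading out every entry of such a state would take many repeated measurements. For this reason, HHL's advantage applies mainly when only a summary of x, such as an expectation value, is needed.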

-

Quantum Principal Component Analysis

Principal Component Analysis (PCA) is a technique for reducing the dimensionality of large datasets. Dimensionality reduction usually costs some accuracy, because we must decide how many components to keep without losing crucial information. Done correctly, however, it makes the machine learning task considerably easier, because a smaller dataset is easier to work with.
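For reference, here is a minimal classical PCA in plain Python for two-dimensional data. The data points are made up for illustration. The code centres the data, builds the 2x2 covariance matrix, and finds its eigenvalues in closed form. It then reports how much of the variance the first principal component keeps, which measures the accuracy trade-off described above.

```python
import math

def pca_2d(points):
    """Return (eigenvalues, explained-variance ratio of the first component) for 2-D data."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Sample covariance matrix [[a, b], [b, c]] of the centred data.
    a = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    c = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    b = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    # Eigenvalues of a symmetric 2x2 matrix, largest first.
    mean = (a + c) / 2
    r = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    eigenvalues = (mean + r, mean - r)
    explained = eigenvalues[0] / (eigenvalues[0] + eigenvalues[1])
    return eigenvalues, explained

data = [(2.5, 2.4), (0.5, 0.7), (2.2, 2.9), (1.9, 2.2), (3.1, 3.0),
        (2.3, 2.7), (2.0, 1.6), (1.0, 1.1), (1.5, 1.6), (1.1, 0.9)]
eigenvalues, explained = pca_2d(data)
print(eigenvalues, explained)
```

These two features are strongly correlated, so keeping only the first component discards little of the variance.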

For example, a traditional computer can perform PCA efficiently on a dataset with 10 input features. If the dataset has a million features, however, traditional PCA becomes impractical. The covariance matrix alone has a million times a million entries, and assessing the relevance of each variable becomes very difficult.
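A back-of-the-envelope calculation shows the scale of the problem. It assumes the covariance matrix is stored densely as 64-bit floats, at 8 bytes per entry. With standard dense algorithms, a full eigendecomposition also takes time roughly proportional to the cube of the number of features.

```python
def covariance_bytes(n_features: int, bytes_per_entry: int = 8) -> int:
    """Memory needed to store a dense n_features x n_features covariance matrix."""
    return n_features * n_features * bytes_per_entry

print(covariance_bytes(10))         # 800 bytes
print(covariance_bytes(1_000_000))  # 8000000000000 bytes, about 8 TB
```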

Computing eigenvectors and eigenvalues is another challenge for traditional computers: the higher the dimensionality of the input, the larger the set of eigenvectors and eigenvalues that must be found. Quantum computers may handle this problem quickly and efficiently, provided the data is stored in Quantum Random Access Memory (QRAM). A data vector is selected at random from QRAM and mapped into a quantum state of the qubits.
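Classically, the leading eigenvector and eigenvalue are often found iteratively. Below is a minimal power-iteration sketch in plain Python. Power iteration is one standard classical method, not the quantum approach. Each step costs a full matrix-vector product, and that cost is what becomes expensive as the dimension grows.

```python
import math

def power_iteration(M, iterations=100):
    """Estimate the dominant eigenvalue and eigenvector of a square matrix M."""
    n = len(M)
    # Arbitrary starting guess; it must not be orthogonal to the dominant eigenvector.
    v = [1.0 / (i + 1) for i in range(n)]
    for _ in range(iterations):
        # One O(n^2) matrix-vector product per iteration, then renormalise.
        w = [sum(M[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T M v gives the eigenvalue estimate (v has unit length).
    Mv = [sum(M[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(v[i] * Mv[i] for i in range(n))
    return eigenvalue, v

value, vector = power_iteration([[2.0, 1.0], [1.0, 2.0]])
print(value, vector)  # eigenvalue about 3, eigenvector proportional to [1, 1]
```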

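Quantum Principal Component Analysis encodes each data vector using only a logarithmic number of qubits, about log2 d qubits for a d-dimensional vector. Choosing data vectors at random and encoding them as quantum states produces a density matrix. This density matrix is proportional to the covariance matrix of the data.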
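The link between randomly chosen data vectors and the covariance matrix can be checked classically. The plain-Python sketch below follows the usual qPCA setup; the data set and the sampling rule are illustrative assumptions. Each centred vector x_i is encoded as the normalised state x_i/||x_i|| and chosen with probability proportional to ||x_i||². The resulting mixture is the density matrix ρ = Σ p_i |x_i⟩⟨x_i|, which equals the covariance matrix divided by its trace. The sketch also counts the qubits needed to encode a d-dimensional vector, ceil(log2 d).

```python
import math

def density_matrix(vectors):
    """Mixture of normalised states |x><x|, each picked with probability ||x||^2 / total."""
    d = len(vectors[0])
    total = sum(sum(v * v for v in x) for x in vectors)
    rho = [[0.0] * d for _ in range(d)]
    for x in vectors:
        norm_sq = sum(v * v for v in x)
        p = norm_sq / total                          # sampling probability
        state = [v / math.sqrt(norm_sq) for v in x]  # amplitude encoding
        for i in range(d):
            for j in range(d):
                rho[i][j] += p * state[i] * state[j]
    return rho

def covariance(vectors):
    """Covariance of already-centred data (divides by n - 1)."""
    n, d = len(vectors), len(vectors[0])
    return [[sum(x[i] * x[j] for x in vectors) / (n - 1) for j in range(d)]
            for i in range(d)]

def qubits_needed(dimension: int) -> int:
    """Qubits required to hold a vector of this length as amplitudes."""
    return math.ceil(math.log2(dimension))

# Centred toy data: each column sums to zero.
data = [[1.0, 2.0], [-1.0, -1.0], [0.0, -1.0]]
rho = density_matrix(data)
cov = covariance(data)
trace = cov[0][0] + cov[1][1]
print(rho, [[c / trace for c in row] for row in cov])  # the two matrices agree
print(qubits_needed(1_000_000))  # 20
```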

By sampling the data repeatedly, we can take the quantum version of any data vector and decompose it into its principal components. This uses a technique called density matrix exponentiation together with the quantum phase estimation algorithm, which finds the eigenvectors and eigenvalues of a matrix. When the covariance matrix is dominated by a few large principal components, both computational and time complexity can, in principle, be reduced exponentially.
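Density matrix exponentiation produces the unitary e^{-iρt}, and phase estimation then reads off its phases. This reveals the principal components because, for any eigenvector u of ρ with eigenvalue λ, e^{-iρt}u = e^{-iλt}u. The plain-Python check below is a classical illustration only, and ρ and t are chosen for illustration. It computes the matrix exponential with a truncated Taylor series and confirms that the phase picked up by an eigenvector equals -λt.

```python
import cmath
import math

def mat_mul(A, B):
    """Product of two square matrices given as nested lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def expm_minus_i(rho, t, terms=30):
    """Approximate exp(-i * rho * t) with a truncated Taylor series."""
    n = len(rho)
    X = [[-1j * t * rho[i][j] for j in range(n)] for i in range(n)]
    result = [[1 + 0j if i == j else 0j for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]  # current term X^k / k!, starting at the identity
    for k in range(1, terms):
        term = mat_mul(term, X)
        term = [[v / k for v in row] for row in term]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

# A 2x2 density matrix (trace 1) with eigenvalues 0.75 and 0.25.
rho = [[0.5, 0.25], [0.25, 0.5]]
u = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # eigenvector for eigenvalue 0.75
t = 1.0

U = expm_minus_i(rho, t)
Uu = [sum(U[i][j] * u[j] for j in range(2)) for i in range(2)]
phase = cmath.phase(Uu[0] / u[0])  # the quantity phase estimation would estimate
print(phase)  # approximately -0.75, i.e. -lambda * t
```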

-

Quantum Support Vector Machines (SVMs)

The Support Vector Machine (SVM) is a well-known machine learning technique that can be used for both classification and regression. In classification, it separates linearly separable data into their classes. If the data is not linearly separable, it is mapped into a higher-dimensional feature space until it becomes linearly separable. This mapping is often done implicitly, through a kernel function.
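The idea of raising the dimensions can be seen on XOR-style data, which no straight line separates in two dimensions. In this plain-Python sketch, the feature map φ(x1, x2) = (x1, x2, x1·x2) is a hypothetical choice made for illustration. After the mapping, the single linear rule "sign of the third coordinate" classifies every point correctly.

```python
def feature_map(x1: float, x2: float) -> tuple:
    """Hypothetical map from 2-D input to a 3-D feature space."""
    return (x1, x2, x1 * x2)

def linear_classifier(features: tuple, weights=(0.0, 0.0, 1.0), bias=0.0) -> int:
    """A hyperplane in feature space: +1 on one side, -1 on the other."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else -1

# XOR-like data: label +1 when both coordinates share a sign, -1 otherwise.
points = [((1, 1), 1), ((-1, -1), 1), ((1, -1), -1), ((-1, 1), -1)]

predictions = [linear_classifier(feature_map(*x)) for x, _ in points]
print(predictions == [label for _, label in points])  # True
```

In practice, an SVM learns the weights itself. It usually works with a kernel function k(x, y) = φ(x)·φ(y) rather than building φ explicitly.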

On traditional machines, SVMs are only practical up to a certain number of dimensions. Beyond that point, the cost of computing in the larger feature space exceeds the available processing capability.

Quantum computers may be able to run the support vector algorithm much faster. In a quantum SVM, data is encoded into quantum states. Superposition and entanglement then give access to a feature space whose dimension grows exponentially with the number of qubits.
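One common quantum SVM design estimates a kernel from the overlap of two encoded quantum states, k(x, y) = |⟨φ(x)|φ(y)⟩|². The plain-Python simulation below assumes a simple angle encoding, chosen for illustration: each feature x_j sets one qubit to cos(x_j/2)|0⟩ + sin(x_j/2)|1⟩. The code builds the full 2^n-dimensional state vector, so the cost of simulating it classically grows exponentially with the number of qubits. This particular encoding uses no entanglement, so it also has a cheap closed form, which the code checks against. Practical proposals add entangling gates so that the kernel is believed to be hard to compute classically.

```python
import math

def encode(x):
    """Angle-encode each feature on its own qubit; return the 2^n state vector."""
    state = [1.0]
    for value in x:
        qubit = [math.cos(value / 2), math.sin(value / 2)]
        # Tensor (Kronecker) product grows the vector from 2^k to 2^(k+1) entries.
        state = [s * q for s in state for q in qubit]
    return state

def quantum_kernel(x, y):
    """Fidelity kernel |<phi(x)|phi(y)>|^2; amplitudes are real for this encoding."""
    overlap = sum(a * b for a, b in zip(encode(x), encode(y)))
    return overlap ** 2

def closed_form(x, y):
    """Same kernel for this product-state encoding: prod cos^2((x_j - y_j) / 2)."""
    return math.prod(math.cos((a - b) / 2) ** 2 for a, b in zip(x, y))

x, y = [0.1, 0.7, 1.3], [0.4, 0.2, 2.0]
print(len(encode(x)))  # 8 amplitudes for 3 qubits
print(quantum_kernel(x, y), closed_form(x, y))
```

The resulting kernel values can be passed to an ordinary classical SVM, for example one that accepts a precomputed kernel matrix.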

-

Quantum Optimisation

Optimisation means producing the best possible result while using as few resources as possible. In a machine learning model, optimisation improves the learning process so that the model delivers the most appropriate and accurate predictions.

The basic goal of optimisation is to minimise the loss function. A higher loss means less reliable and less accurate outputs, which can lead to costly incorrect predictions.
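Minimising a loss is usually done iteratively. The plain-Python sketch below runs classical gradient descent on the mean squared error of a one-variable linear model. The data, generated from y = 2x + 1, the learning rate, and the number of steps are all illustrative choices. Each step moves the parameters against the gradient, so the loss shrinks towards its minimum.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit w and b by repeatedly stepping against the MSE gradient."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = gradient_descent(xs, ys)
print(w, b, mse(w, b, xs, ys))  # w close to 2, b close to 1, loss close to 0
```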

Most machine learning approaches tune their parameters iteratively, and quantum optimisation techniques may help with these optimisation problems. In some proposed quantum algorithms, several copies of the current solution are each encoded in a quantum state. These copies are used together to improve that solution at every iteration of the machine learning method.

-

Quantum Deep Learning

Combining quantum computing with deep learning could shorten the time it takes to train a neural network. This approach makes it possible to propose new frameworks for deep learning and to carry out the underlying optimisation on quantum hardware. Conventional deep learning methods can also be simulated on a real quantum computer.

The computational complexity of a multi-layer perceptron grows as the number of neurons increases. Dedicated GPU clusters can boost performance and cut training time substantially. As networks keep growing, however, even their cost rises, and quantum computers may eventually outperform them.
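To see how the cost grows, the plain-Python helper below counts the weights and biases of a fully connected multi-layer perceptron. The layer sizes are illustrative. Doubling the width of the hidden layers roughly quadruples the weights between them, and every one of those parameters must be updated at each training step.

```python
def mlp_parameters(layer_sizes):
    """Weights plus biases for a fully connected network with the given layer sizes."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

print(mlp_parameters([784, 128, 10]))       # 101770
print(mlp_parameters([784, 256, 256, 10]))  # 269322
```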

In some proposals, quantum computers are built so that the hardware itself resembles a neural network, rather than implementing the network in software as traditional computers do. A qubit represents a neuron, the fundamental unit of a neural network. As a result, a quantum system of qubits may operate as a neural network and could run deep learning applications faster than traditional machine learning methods.
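As a toy picture of a qubit acting as a neuron, the plain-Python sketch below simulates one hypothetical design. The weighted sum of the inputs sets the angle θ of a single-qubit rotation R_Y(θ) applied to |0⟩. The neuron's output is the probability of measuring 1, which is sin²(θ/2). This is a classical simulation of one illustrative construction, not a standard quantum neuron or any library's API.

```python
import math

def qubit_neuron(inputs, weights, bias):
    """Hypothetical quantum neuron: rotate |0> by theta = w.x + b, return P(measure 1)."""
    theta = sum(w * x for w, x in zip(weights, inputs)) + bias
    # R_Y(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>
    amplitude_one = math.sin(theta / 2)
    return amplitude_one ** 2

print(qubit_neuron([0.0], [1.0], 0.0))            # 0.0: the qubit stays in |0>
print(qubit_neuron([1.0], [math.pi], 0.0))        # 1.0: fully rotated to |1>
print(qubit_neuron([0.5, 0.5], [1.0, 1.0], 0.0))  # between 0 and 1: a smooth, bounded activation
```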

Conclusion

Quantum Machine Learning is still in its infancy as a theoretical field. Its main objective is to speed up machine learning by applying what we know about quantum computing. Quantum Machine Learning theory takes components of conventional Machine Learning theory and applies them to quantum computing.